LLaVAC: Fine-tuning LLaVA as a Multimodal Sentiment Classifier

Abstract

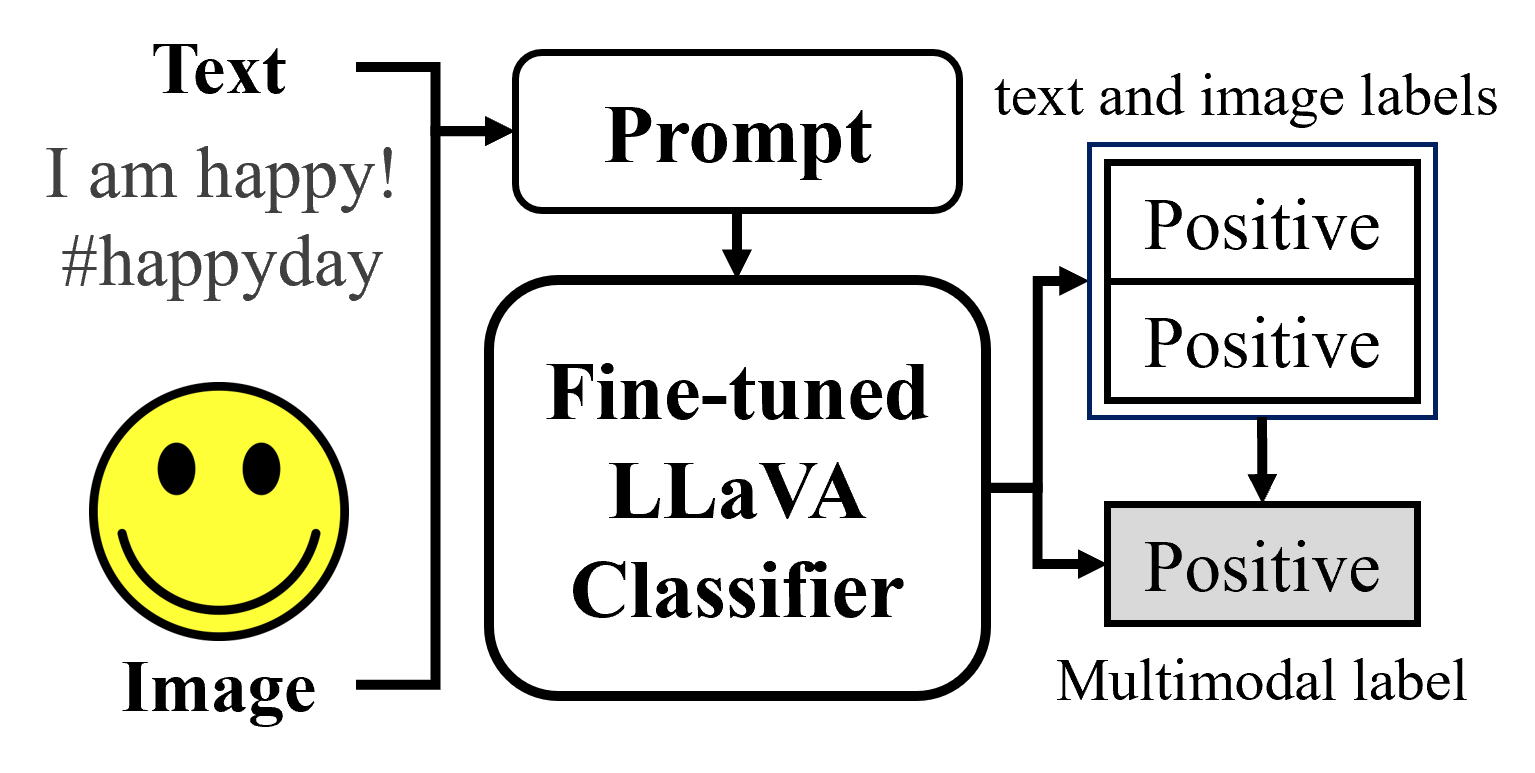

We introduce LLaVAC, a method for constructing a classifier for multimodal sentiment analysis. This classifier is capable of classifying both text and image modalities by performing fine-tuning on the Large Language and Vision Assistant (LLaVA). In this work, we design a prompt to consider unimodal and multimodal labels and fine-tune LLaVA for classifying multimodal sentiment labels by generating predicted labels. Our method outperforms baselines by up to 7.31% in accuracy and by 8.76% in weighted-F1 in the MVSA-Single dataset across three dataset processing procedures.

Further reading:

- Click here to download the PDF version of this work.